The Collapse of Model Collapse

Critics of synthetic data seem to have manufactured their own evidence

Oh, the irony is rich and deep, my gentle readers. Come along as I take a close look at a paper making the rounds in policy circles on “model collapse”: what supposedly happens when you train a large language model on synthetic data.

Let’s set the table. Large Language Models, also known as LLMs, are what most normies think of when they hear “AI.” It’s products like OpenAI’s ChatGPT and Anthropic’s Claude, which fall under the larger heading of generative AI — artificial intelligence that generates an output, whether that’s a picture or an essay or computer code. These models are trained using immense amounts of raw data: Wikipedia, Reddit entries, old posts on Usenet, Project Gutenberg, stuff like that, and that’s just text. Anything on the internet can usually be scraped and shoved into a big pile. Then throw in transcripts of TV shows and podcasts, pictures and videos, every type and manner of content that we’ve ever digitized as a human species.

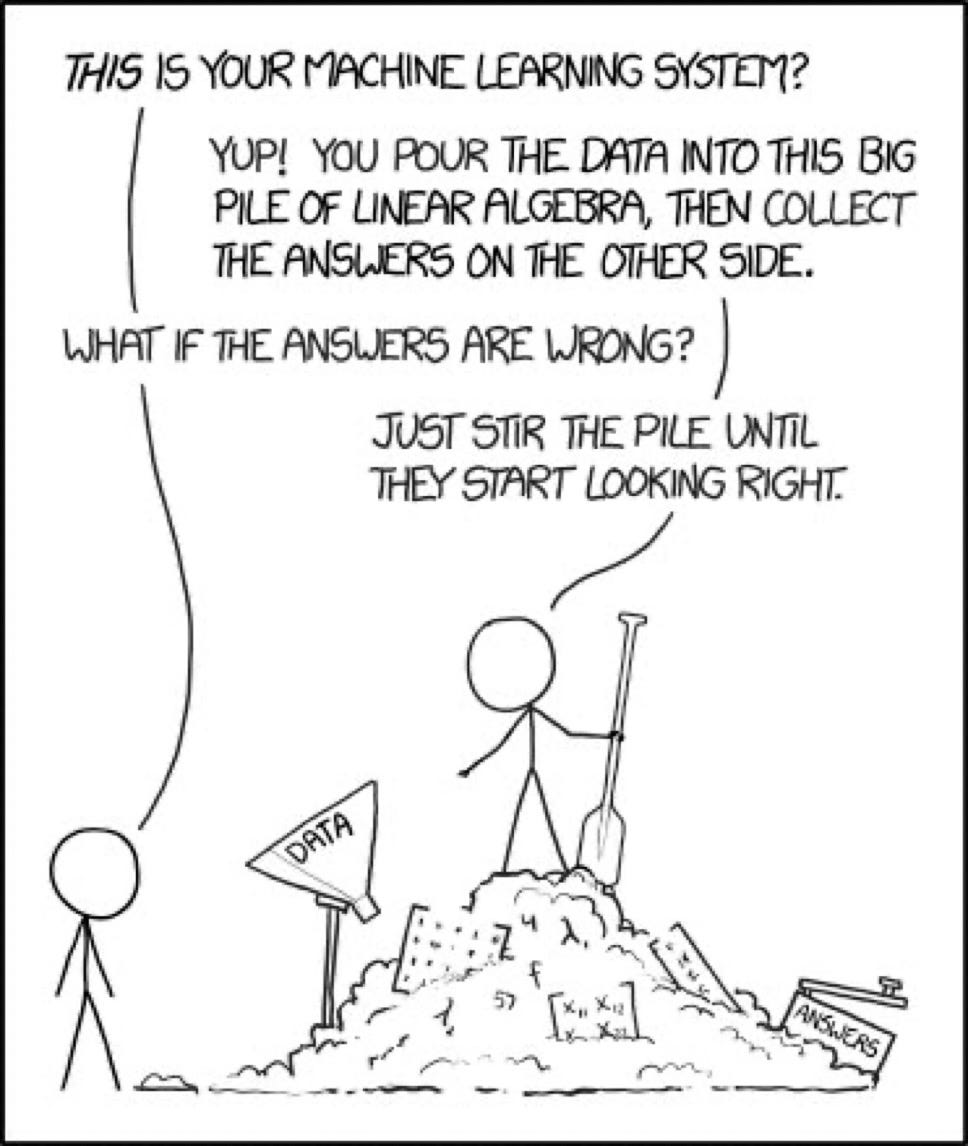

Then we train, which means running some vector algebra over the symbolic representations and doing a bunch of matrix multiplications. It’s really fascinating, but let’s put that aside for now. On the other side, an LLM pops out. As the ever-insightful XKCD comic puts it,

What happens when we run out of data? To a normie, this sounds absurd. The internet is so vast, especially when we count non-English content, that we simply can’t imagine ever reaching the edge of it, any more than we could read every book in a library. But computers aren’t like that; they just consume and ingest and devour. Text, too, is really quite small in data terms. I found a digital copy of the Odyssey online in the original Greek, and this foundational text of Western civilization is a mere 465 kilobytes. Computers are just really good at storing and processing words, especially English and other languages written with alphabets. Video and audio are a different matter, but it’s not entirely clear how helpful that type of data will be for generative AI that isn’t trying to do visual things like moving around in the world or creating an image.
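To put a number on “text is small,” here’s a quick Python sketch of what text costs in UTF-8, the encoding most web text uses. Plain English letters take one byte each, basic Greek letters take two, and accented polytonic letters like the ῦ in Homer’s μοῦσα take three. The sample strings and the one-terabyte yardstick are my own illustrative choices, and I’m assuming “465 kilobytes” means 465,000 bytes.

```python
def utf8_size(text: str) -> int:
    """Bytes needed to store `text` in UTF-8."""
    return len(text.encode("utf-8"))


english = "Tell me, O Muse"               # ASCII: 1 byte per character
greek = "\u03bc\u03bf\u1fe6\u03c3\u03b1"  # "μοῦσα"; ῦ (U+1FE6) is polytonic Greek

print(len(english), utf8_size(english))  # 15 15
print(len(greek), utf8_size(greek))      # 5 11

# Back-of-envelope, assuming 1 KB = 1,000 bytes and 1 TB = 10**12 bytes.
odyssey_bytes = 465 * 1_000
copies_per_tb = 10**12 // odyssey_bytes
print(copies_per_tb)  # 2150537 Odyssey-sized texts per terabyte
```

Even at two or three bytes a letter, a single terabyte drive holds over two million Odysseys.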

Ilya Sutskever, one of the co-founders of OpenAI and absolutely on the cutting edge of the field, said at a conference a couple of weeks ago that data is the “fossil fuel” of AI. “We have but one internet,” he said, and once all that data is in our training corpus, that’s it.

The proposed solution is synthetic data: having LLMs generate data and then using that data to train a larger, more advanced model. It sounds plausible at first, especially if you think of how the chess and Go systems were trained. Instead of just studying records of human games, they played against themselves and made perhaps billions of absurd, no-human-would-do-it moves across millions of games. Moves that won games were graded up, moves that lost were graded down, and over time the system learned on its own how to win consistently. The systems got so good that top professional Go players improved their own games by studying some of the AI’s outlandish inventions. Humans never thought to play those moves, but the AI did, because it explored far more of the possibilities than any human ever could.
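Here’s a toy version of that grading loop in Python. To be clear, this is not how AlphaGo or AlphaZero actually worked (they paired tree search with deep neural networks); it’s a tabular sketch on a made-up miniature game where players alternately take 1, 2, or 3 stones and whoever takes the last one wins. The names and parameters (`self_play`, `eps`, `alpha`, the number of games) are hypothetical choices of mine. After each game, every move the winner made is nudged toward +1 and every move the loser made toward -1.

```python
import random

MOVES = (1, 2, 3)  # remove 1, 2, or 3 stones per turn; taking the last stone wins


def legal(pile):
    """Moves allowed from a pile of this size."""
    return [m for m in MOVES if m <= pile]


def choose(q, pile, eps, rng):
    """Epsilon-greedy: usually the best-graded move, occasionally a random one."""
    moves = legal(pile)
    if rng.random() < eps:
        return rng.choice(moves)
    return max(moves, key=lambda m: q[(pile, m)])


def self_play(games=20_000, max_pile=12, eps=0.2, alpha=0.05, seed=0):
    rng = random.Random(seed)
    # Every (pile, move) pair starts with a neutral grade of 0.
    q = {(p, m): 0.0 for p in range(1, max_pile + 1) for m in legal(p)}
    for _ in range(games):
        pile = rng.randint(1, max_pile)
        history = []  # (player, pile, move) for each move in this game
        player = 0
        while pile > 0:
            move = choose(q, pile, eps, rng)
            history.append((player, pile, move))
            pile -= move
            player = 1 - player
        winner = history[-1][0]  # whoever took the last stone
        # Grade every move: nudge toward +1 if its player won, -1 if they lost.
        for who, p, m in history:
            reward = 1.0 if who == winner else -1.0
            q[(p, m)] += alpha * (reward - q[(p, m)])
    return q


def greedy_policy(q, max_pile=12):
    """Best-graded move for each pile size after training."""
    return {p: max(legal(p), key=lambda m: q[(p, m)]) for p in range(1, max_pile + 1)}


policy = greedy_policy(self_play())
print(policy)
```

Nobody tells it the trick (leave your opponent a multiple of four), but if the sketch behaves as intended, the learned move from small piles should converge to `pile % 4`.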

Synthetic data also sounds absolutely absurd. You’re going to ask Claude 3.5 to write fifty poems about leaves falling on snow and then throw them into a pile to train Claude 4 to get better at poetry? Are you nuts? It’s especially absurd if you stopped paying attention somewhere around GPT-4 in late 2023 or early 2024, when models hallucinated on a regular basis and turned out absolute junk that made no sense. Grammatically correct English sentences, to be sure, but flat and dull and unremarkable. “Slop,” as it’s pejoratively called.

Using slop to train a model will only produce more slop. Picture a future where our synthetic overlords drown us in oceans of bland and tasteless slop, force-feeding us cheap gruel and draining the world of everything quirky, edgy, interesting, and emotional: everything real and human. Synthetic slop as far as the eye can see, grays and blacks with no color, the fading light of humanity struggling amidst the ever-encroaching darkness.

The argument, as most prominently expressed by Shumailov, Shumaylov, et al., gets very mathematical. Published in Nature in July 2024, the paper is worth exploring in detail. I’m told it’s been passed around policy circles in DC since it was submitted for publication in October 2023, and that it is highly influential among people drafting laws and working at think tanks on how to legislate and regulate AI. It’s unclear to me whether it played a role in the SB 1047 fight this past summer; that fight seemed to me to be more about liability for model misuse.

Just to define our terms up front, “model collapse is a degenerative process affecting generations of learned generative models, in which the data they generate end up polluting the training set of the next generation. Being trained on polluted data, they then mis-perceive reality.” Sounds pretty stark. They use statistical arguments to build up this theoretical intuition and conclude that “both early model collapse, in which only the low-probability events get cut off, and late stage model collapse, in which the process begins to collapse into a single mode, must arise in the case of discrete distributions with perfect functional approximation.” (emphasis mine)
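You can watch both kinds of collapse happen in a toy. Below is my own Python sketch of the general intuition, not the paper’s code: a “model” that is just a probability table over tokens, refit each generation by counting a finite sample of its own output. The vocabulary size, sample size, and Zipf-like starting distribution are arbitrary assumptions. Once a rare token draws zero samples, its probability becomes zero and it can never come back, so the support only shrinks (early collapse); keep going and the table drifts to a single token (late collapse).

```python
import random
from collections import Counter


def refit(probs, n_samples, rng):
    """One generation: sample a finite training set from the current model,
    then fit the next model by simple counting (a perfect fit to that sample)."""
    tokens = list(probs)
    sample = rng.choices(tokens, weights=[probs[t] for t in tokens], k=n_samples)
    counts = Counter(sample)
    # Tokens that drew zero samples get probability 0 and are dropped for good.
    return {t: c / n_samples for t, c in counts.items()}


def simulate(vocab_size=100, n_samples=200, max_generations=5_000, seed=0):
    rng = random.Random(seed)
    # Generation 0: a long-tailed, Zipf-like "real" distribution.
    weights = [1 / rank for rank in range(1, vocab_size + 1)]
    total = sum(weights)
    probs = {t: w / total for t, w in enumerate(weights)}
    support_sizes = [len(probs)]  # how many distinct tokens each generation can emit
    for _ in range(max_generations):
        probs = refit(probs, n_samples, rng)
        support_sizes.append(len(probs))
        if len(probs) == 1:  # late-stage collapse: a single mode, a delta function
            break
    return support_sizes, probs


sizes, final = simulate()
print("support after generations 0-5:", sizes[:6])
print("generations until a single token remains:", len(sizes) - 1)
```

How fast this happens depends heavily on `n_samples`: a bigger sample per generation keeps rare tokens alive longer.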

The authors then run a series of experiments to test this theoretical understanding, training a model on its own outputs for five generations and then statistically sampling the diversity of the outputs. The results show this convergence, with sharply reduced perplexity over time.
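For anyone who hasn’t met it, perplexity measures how surprised a model is by a piece of text: the exponential of the average negative log-probability the model assigns to each token. Here’s a minimal Python sketch with made-up probabilities standing in for real model outputs. A uniform guess over k options scores exactly k, and a model that has narrowed to a few very likely tokens scores close to 1 on its own samples.

```python
import math


def perplexity(token_probs):
    """exp of the mean negative log-probability per token; lower = less surprised."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)


# Hypothetical probabilities a model might assign to each token of some text.
confident = [0.9, 0.8, 0.95, 0.85]
unsure = [0.2, 0.1, 0.25, 0.15]
uniform_4 = [0.25] * 4  # guessing uniformly among 4 options at every step

print(perplexity(confident), perplexity(unsure), perplexity(uniform_4))
```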

But here’s exactly where it falls apart. Their methodology is, to put it candidly, ridiculous, and more than that, filled with the worst sort of excuse-making. I have a bit of sympathy for them, since this field is moving at light speed. The last three months of 2024 alone brought mind-blowing advances: the release of OpenAI’s o1, their preview of o3 in December, and DeepSeek’s V3, which put up state-of-the-art results on evals using only about $6 million in compute on export-restricted H800 GPUs rather than Nvidia’s top-end chips.

The Shumailov paper was submitted in October 2023 and published in July 2024, so it was already well behind the curve when it finally came out. But even in October 2023, it wasn’t anywhere close to the frontier.

The researchers sniff that “We can easily replicate all experiments covered in this paper with larger language models in non-fine-tuning settings to demonstrate model collapse.” But they don’t. Why not, if you’re making these bold claims in the world’s most prominent scientific journal? “Given that training a single moderately large model produces twice the American lifetime’s worth of CO2 (ref. 15), we opted to not run such an experiment and instead focus on a more realistic setting for a proof of concept.”

Nonsense. Absolute nonsense. Just be honest and say “our department chair wouldn’t let us use the big computers for this” instead of making some moralizing, pearl-clutching dreck about carbon emissions. (By the way, reference 15 is a 2019 paper whose estimates of compute requirements and electricity sources are way out of date.) They could’ve trained on a server in Texas using wind and solar, or done it in France, where most of the grid is nuclear, or bought offsets if they were genuinely concerned about climate.

So what model did they use instead of a “moderately large model”? Here’s where it gets even more ridiculous. They used Meta’s OPT-125m, as hosted on Hugging Face, and say that “even the language experiments described in this paper took weeks to run.”

One hundred and twenty-five million parameters. Million with an M. Reader, I have an 8-billion-parameter model running on my iPhone 16, and it’s considered a small model. I can run a 70-billion-parameter model at decent speeds on a two-year-old Mac Studio with an M2 Ultra chip.
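The gap is easier to see as arithmetic. This Python sketch estimates memory for the weights alone (ignoring the context cache and activations) at 16 bits per parameter and at 4 bits per parameter. The 4-bit figure is my assumption about the kind of quantization on-device models commonly use, not a spec for any particular app.

```python
def weight_memory_gb(n_params, bits_per_weight):
    """Rough memory for the weights alone, in gigabytes (10**9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


models = {"OPT-125m": 125e6, "8B": 8e9, "70B": 70e9}

for name, n_params in models.items():
    fp16 = weight_memory_gb(n_params, 16)  # 16-bit floats, a common serving precision
    q4 = weight_memory_gb(n_params, 4)     # assumed 4-bit quantization
    print(f"{name}: {fp16:.2f} GB at 16-bit, {q4:.2f} GB at 4-bit")

print(models["8B"] / models["OPT-125m"], models["70B"] / models["OPT-125m"])  # 64.0 560.0
```

An 8B model is 64 times the size of OPT-125m, and a 70B model is 560 times.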

Which is all to say: of course they found model collapse. The synthetic data generated by this tiny toy model was buggy and weak, and training runs built on top of its output would collapse to a delta function.

It’s like complaining that you can’t enlarge a picture without things getting fuzzy. You do some math and say, See? It loses resolution as we zoom in 100x, and then another 100x, and another 100x. Then you test it with a 150 KB digital image from a 2002 flip phone and say, See? We blew it up 100x and it no longer looks like a rhododendron. Of course we didn’t use a more modern camera. Do you know what those things cost? And anyway, we don’t want to endorse conspicuous consumption, because we’re morally superior academics.

In reality, a 48-megapixel image from a decent camera blows up quite nicely, even to massive scale. Yes, it’s fuzzy (or fuzzier than the original), but it’s still a rhododendron, and you can see a ton of detail.

I honestly doubt we’ll end up living in a world of slop. Our current models are vastly superior to the ones we had in 2023, and they’re advancing rapidly. Ilya may be right that we’re running out of non-synthetic data, but I question whether that’s ultimately meaningful. Maybe we have more than we need to create objects of real beauty and enable a world of abundance and delight. Maybe our human resolution just isn’t all that sharp. Sure, in a mathematical sense, the models will break down if we push them another couple of orders of magnitude. Possibly. But we can’t say for sure from here, and all the actual, real-world developments, even just since this paper came out in July 2024, demonstrate convincingly that we’re nowhere close to collapse.

Keep building.